The Era of Smart Glasses:

What opportunities lie ahead for journalism?

LE QUOC MINH

Member of the Party Central Committee; Editor-in-Chief of Nhan Dan Newspaper; Deputy Head of the Party Central Committee’s Commission for Communications, Education and Mass Mobilisation; President of the Viet Nam Journalists Association

Around 2013, during a meeting with Google’s Vice President for the Asia-Pacific region, I had the opportunity to experience Google Glass — a device I had previously only read about on technology news sites and which had yet to reach the market. It bore little resemblance to ordinary eyewear, instead evoking something straight out of a science fiction film.

At the time, its functions were fairly limited, essentially confined to taking photos and recording videos, yet its very existence seemed almost inconceivable. Unfortunately, after a few years the project collapsed, and information about smart glasses gradually faded from public attention.

Ten years later, Meta partnered with eyewear brand Ray-Ban to launch a pair of smart glasses that look no different from conventional ones. I was determined to get hold of a pair to see just how impressive they were. Beyond the ability to listen to music, like some other smart glasses, they allow users to take photos and record one-minute videos, which can then be shared on Meta-owned platforms such as Facebook, Instagram, and WhatsApp. They can even livestream on Facebook, without the user having to hold up a smartphone.

A key distinction lies in the fact that the Meta Ray-Ban glasses are integrated with AI and can be voice-controlled, making them notably convenient. And perhaps it was precisely this product that ignited the smart glasses race that followed.

Technology experts assert that 2026 marks a historic turning point, when smart glasses will no longer be a luxury tech toy but will gradually become an essential device, threatening the long-standing dominance of the smartphone. The explosion of artificial intelligence (AI), coupled with major advances in AR lens technology, has transformed slim-framed glasses into “supercomputers” worn on the face.

The development of smart glasses

The history of smart glasses is a long journey, from laboratory concepts to today’s trillion-dollar race among technology giants. This process can be divided into four main stages:

The early stage: Laying the first foundations (20th century to 2010)

Before the modern concept of “smart glasses” took shape, scientists had already laid the groundwork with head-mounted displays (HMDs).

In 1968, Ivan Sutherland, a professor at Harvard University, created the first augmented reality headset known as the “Sword of Damocles”. So heavy that it had to be suspended from the ceiling, it could only display simple wireframe geometric shapes. In the 1990s, Steve Mann — widely regarded as the father of wearable computing — developed EyeTap, a wearable device that enabled video recording and the overlaying of data onto the user’s vision.

(Source: https://mannlab.com/eyetap)

The boom and the “fall” of Google Glass (2012–2015)

This was the period when smart glasses truly emerged into the public eye and became the focus of widespread attention. In 2012, Google officially unveiled Google Glass. The device was capable of taking photos, recording videos, and displaying notifications via a small prism display positioned above the right eye.

Despite the initial excitement, Google Glass was soon discontinued for individual consumers in 2015 due to privacy concerns (covert recording), its prohibitively high price (1,500 USD at the time), a design widely considered eccentric (the term “Glasshole” emerged), and poor battery life.

The stage of specialisation and audio integration (2016–2021)

After Google’s failure, the market clearly split into two directions.

1/Specialised applications (B2B): Google Glass Enterprise and Microsoft HoloLens focused on supporting engineers, doctors and industrial training.

2/Audio and fashion-oriented products: In 2016, Snapchat launched Spectacles, a simple pair of glasses designed solely for short video recording and social media sharing.

Three years later, Amazon introduced the Echo Frames, built around the Alexa virtual assistant, and Bose launched its audio-focused Bose Frames. Both dropped the display entirely in favour of fashion, lightness and comfort.

The era of AI and augmented reality (2022 to present)

Generative AI technology has breathed new life into wearable devices. The collaboration between Meta and Ray-Ban (2023–2024) created the most significant breakthrough. Smart glasses now resemble ordinary fashion eyewear, yet integrate high-quality cameras and AI capable of “seeing” the world alongside the user.

Photo: squaredtech.co

Meanwhile, Google has re-entered the race with its Android XR platform and the Gemini assistant, promising deep integration between computer vision and multimodal AI. Chinese companies such as Baidu, Xiaomi and Alibaba (with Quark AI Glasses) have also launched competitively priced products, turning AI glasses into the next “billion-dollar pie”.

Current breakthroughs

Below is an overview of the most groundbreaking smart glasses, widely expected to shape our lifestyle in 2026.

Android XR Glasses: The “destroyer” of display space

The project codenamed Project Aura (Android XR Glasses) is currently leading the market, backed by the Google ecosystem.

Its greatest advantage lies in its superior display capability, with a field of view (FOV) of up to 70 degrees, effectively transforming the user’s entire vision into a vast working screen.

Another notable feature is the integration of Gemini AI and gesture-based interaction.

The virtual assistant can understand real-world context through the camera, support real-time translation and provide Google Maps navigation directly on the lenses.

Users can control applications by “touching” the air, thanks to a highly accurate hand-tracking sensor system. A slimmer and lighter version is also available, focusing on comfort and suitability for all-day wear in office environments.

Meta Ray-Ban Display: The perfect blend of display and fashion

Meta Ray-Ban Display is more than just an ordinary pair of smart glasses. It is regarded as a pioneering product that establishes an entirely new segment, positioned between display-free glasses (such as Ray-Ban Meta Gen 2) and bulky augmented reality (AR) headsets like HoloLens.

Users do not need to touch the frame or speak voice commands aloud in public. Instead, the glasses are controlled via an electromyography-based wristband known as a neural band.

While many smart glasses use AI mainly for calls and voice interaction, Meta Ray-Ban Display applies AI to what the wearer sees, giving users a powerful “visionary AI” experience. When the wearer is talking with someone who speaks another language or reading foreign signage, the glasses display translated subtitles directly in front of the eyes in real time. Instead of listening to turn-by-turn directions through a speaker, users see navigation arrows overlaid directly on the route they are walking.

Unlike previous generations, the in-glass display allows users to preview the shooting angle of the 12MP camera, ensuring perfect POV photos and videos.

The latest software update in early 2026 added teleprompter and handwriting recognition features and, naturally, enables control via a neural band.

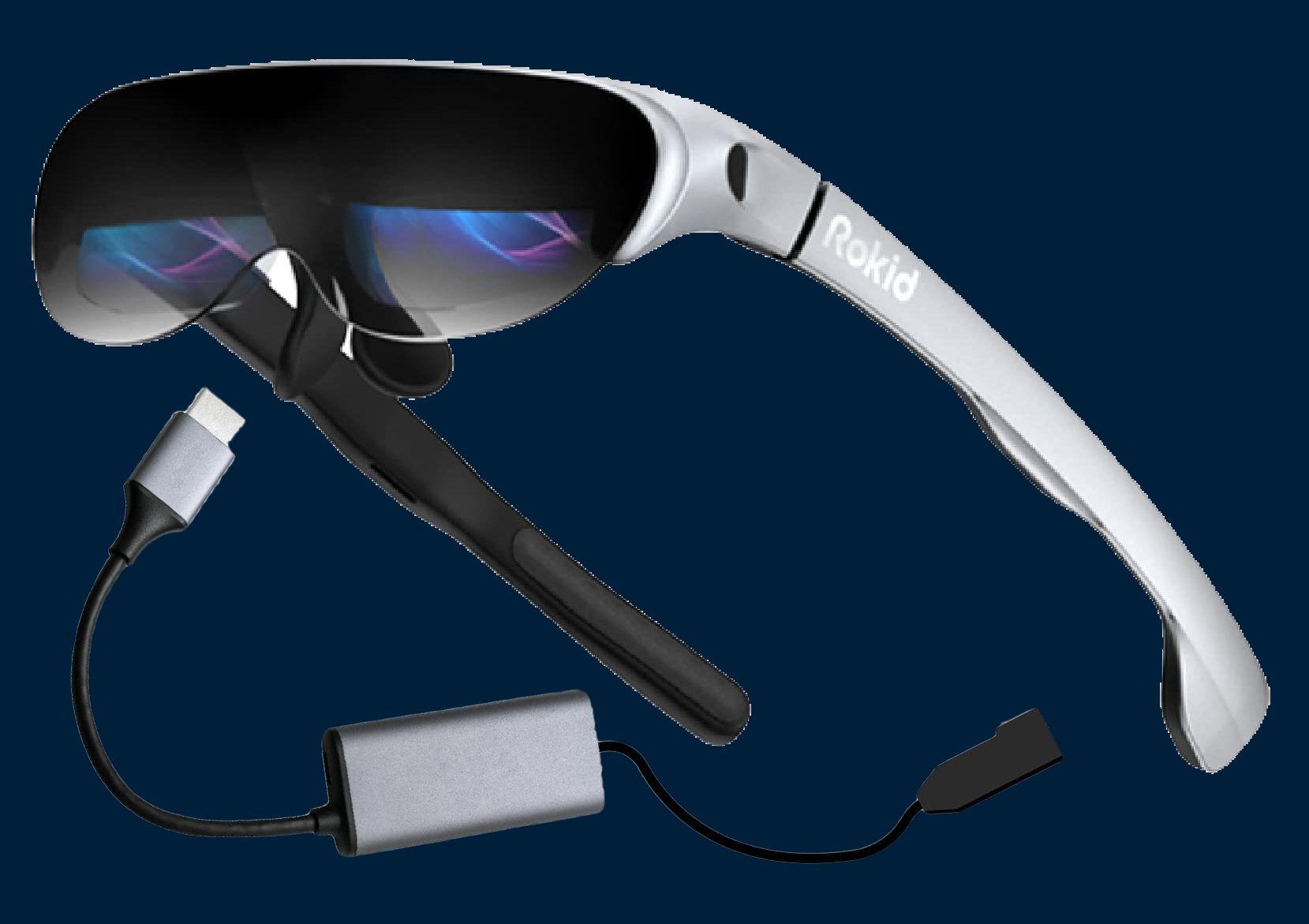

Snapchat Spectacles and Rokid: Creativity and Productivity

While major players focus on operating systems, companies such as Snapchat and Rokid are targeting niche yet highly practical needs.

The new-generation Snapchat Spectacles offer six degrees of freedom (6 DOF), allowing users to play augmented reality games with friends in real-world spaces. This is an ideal device for content creators seeking to share AR experiences in the most intuitive way.

In the productivity segment, Rokid AI Glasses stand out with the integration of ChatGPT-5. These glasses function as a personal assistant: serving as a teleprompter for presentations and as an intelligent recorder capable of instantly summarising meeting minutes. They support translation in nearly 90 languages and are not restricted in certain markets, unlike some of Meta’s latest glasses.

Apple and Amazon: The ecosystem battle

Although Apple keeps things secret until the last minute, leaked sources suggest that 2026 will be a crucial stepping stone before the company launches a fully-fledged AR glasses product in 2027. Apple’s strategy is reportedly split into two tracks: one model focused entirely on audio and Siri (without a display), and another featuring a premium display deeply integrated with Apple Health and Maps.

(Source: theworkersunion.com)

By contrast, Amazon Smart Glasses prioritise practical use. Rather than attempting to replace vision, Amazon’s glasses optimise the audio experience, letting users listen to music and Audible audiobooks and shop via Alexa naturally while on the move.

(Source: rokid)

Why is 2026 the golden moment for smart glasses?

With the participation of major names such as Google, Apple, Amazon and Meta, alongside emerging players like Alibaba and Tecno, smart glasses are expected to transform how people interact with the world. We are moving closer to a future in which smartphone screens are no longer the sole focus of human attention.

Smart glasses are set to break through in 2026 thanks to the convergence of three key factors:

1. Real-time AI: AI is no longer limited to dry, text-based answers; it can now “see” alongside users, offering suggestions about menu items or information about the person they are meeting.

2. Ergonomic design: The glasses now weigh about as much as regular eyewear and accept prescription lenses for short-sighted wearers, eliminating the heaviness of previous generations.

3. AR sharing: The ability for several people to see and interact with the same virtual object in one room is opening a new era for remote education and teamwork.

Real-time AI is the core driving force behind the transformation of smart glasses in 2026 from a supplementary device into a powerful personal assistant, eliminating the latency between perception and action.

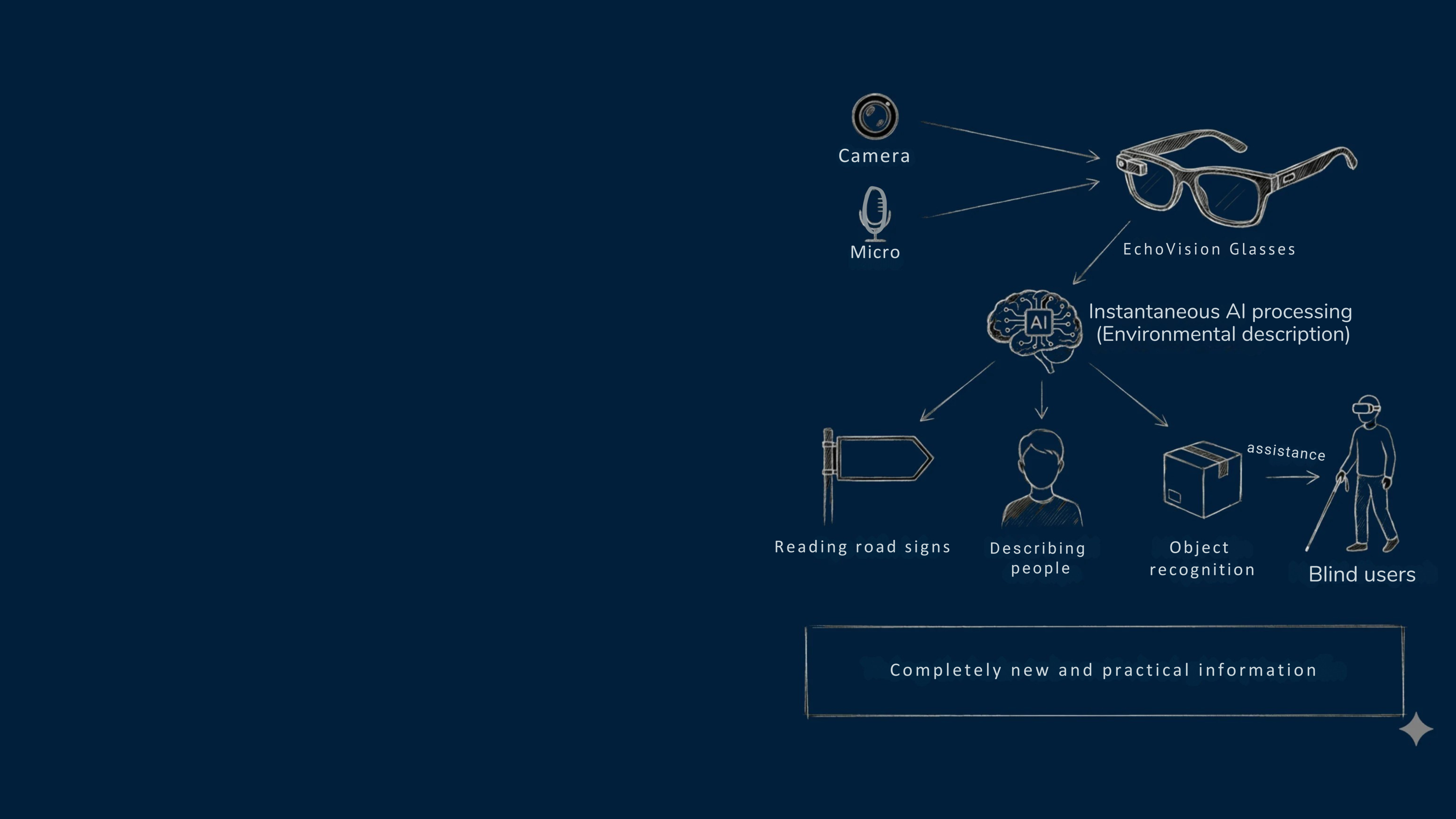

Real-time AI shapes the core value of smart glasses through the following roles:

1. Supporting contextual recognition and analysis:

AI processes input from the camera and microphone instantly to describe the surrounding environment. For example, the glasses can identify objects, read road signs, or describe people on the spot, giving blind users (as in the case of EchoVision glasses) new and practical information rather than a repetition of what they already know.

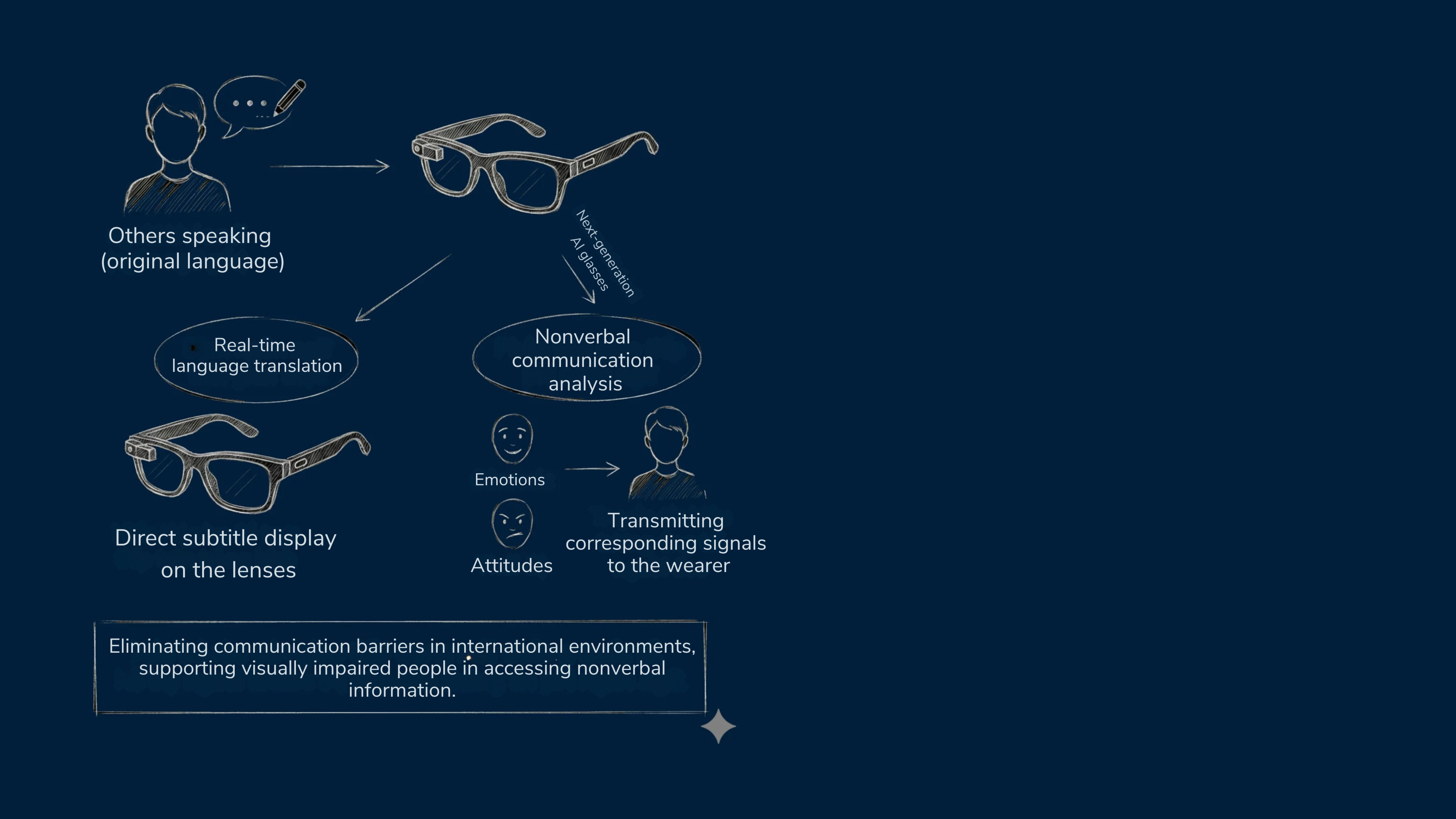

2. Instant translation and communication:

AI enables real-time language translation as soon as someone speaks, displaying subtitles directly on the lenses. This eliminates communication barriers in international environments. Next-generation AI systems can also analyse nonverbal cues (such as emotions and attitudes) and relay corresponding signals to the wearer, cues that visually impaired individuals often struggle to perceive.

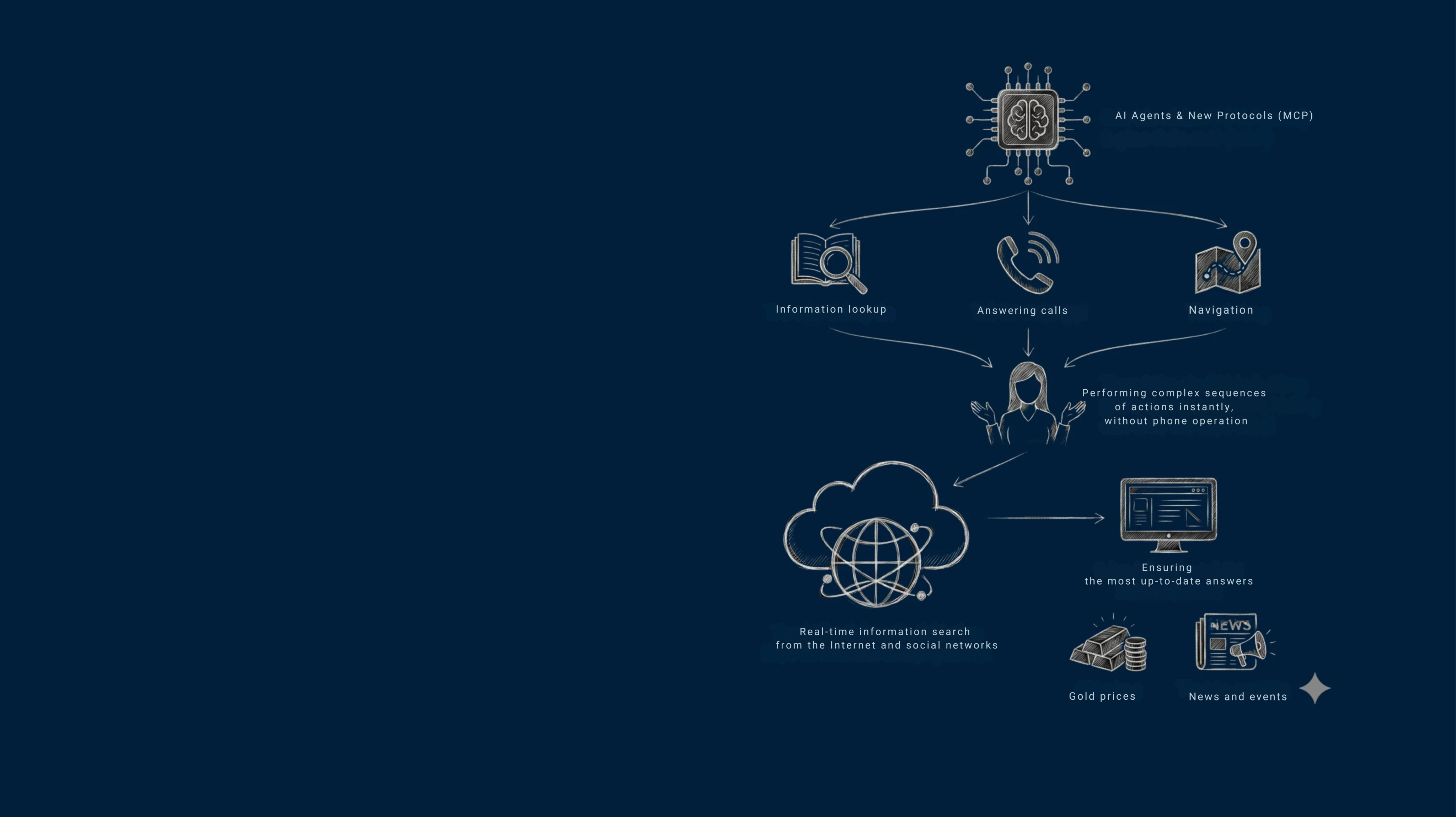

3. Immediate decision support (Agentic AI):

AI agents equipped with new connectivity protocols (such as the Model Context Protocol, MCP) can carry out complex sequences of actions instantly, for example looking up information, answering calls, or finding directions, without the user having to operate the phone. Real-time search across the Internet and social networks keeps answers up to date for queries such as gold prices or news and events.

Furthermore, real-time AI enhances the AR experience: it controls 3D graphic models and digital overlays so that they interact vividly and realistically with the real-world environment, such as changing the surrounding scenery in real time with voice commands.

What direction will journalism take?

Smart glasses open up a new direction for journalism, shifting from simply delivering information to offering readers an “immersive experience”. In the smart glasses race, journalism can do more than deliver news: it has the opportunity to become a strategic content provider, reshaping how the public consumes information.

Experts believe that integrating artificial intelligence into everyday eyewear has the potential to transform how people experience daily life and how they consume news. When combined with augmented reality (AR) technology, this innovation offers unprecedented levels of interaction.

Key applications:

1. Multi-sensory feature reporting: Readers can “travel” to the scene of events mentioned in news reports (for example, a sporting match, an international event, or a disaster-hit area). They not only see the scene but can also experience lifelike emotions through simulated images and sounds.

2. Investigative and in-depth journalism: In investigative reports or complex narratives, smart glasses allow users to observe every corner of the setting, enabling a clearer and more comprehensive understanding of the issues than is possible through 2D images or videos alone.

3. Real estate and geographical exploration: Instead of travelling long distances, readers can examine an entire building or individual show apartments via AR models projected directly before their eyes, delivering a more immersive and realistic experience.

4. Interactive reading and rapid summarisation: Thanks to AI-powered real-time text recognition, smart glasses can display captions or concise summaries of lengthy passages directly within the user’s field of vision, enabling faster and more efficient information absorption.

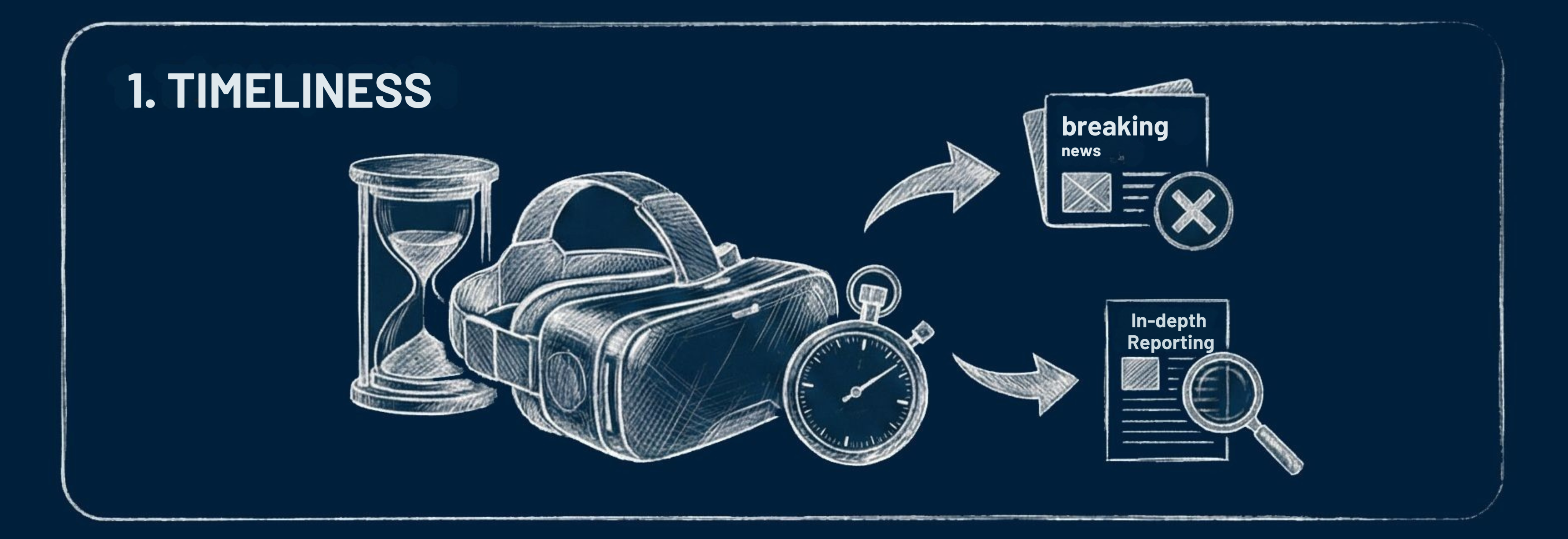

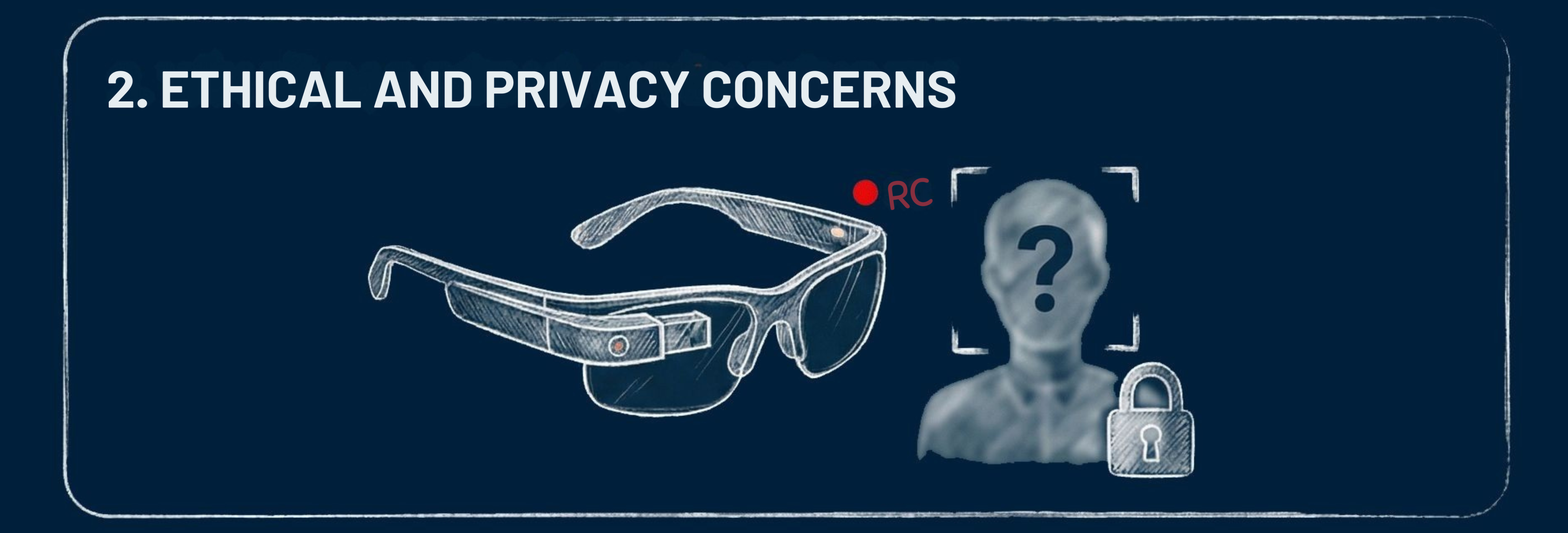

Despite its vast potential, journalism on smart glasses faces barriers similar to those confronting other AI eyewear products:

· Timeliness: Producing detailed AR/VR content requires considerable time, making immersive journalism less suited to breaking news and more appropriate for in-depth features and long-form reporting.

· Ethical and privacy concerns: The ability of AI glasses to record images and audio and to recognise faces raises concerns about whether individuals may be recorded without their knowledge, potentially undermining public trust.

· Cost and accessibility: The expense of producing high-quality devices and AR/VR content remains a significant barrier to mass adoption. There is also the risk that journalism could become dependent on, or controlled by, technology “giants” that dominate information distribution on smart glasses, in much the same way as Facebook and Google have done on the web.

The future success of smart journalism will depend on whether publishers can strike a balance between delivering immersive experiences and safeguarding the privacy rights of those being reported on.

History shows that smart glasses have evolved from bulky laboratory devices, through a period of social scepticism over privacy, to becoming fashionable and practical AI accessories today. There is no reason they cannot also become a platform for accessing information.

The race to develop smart glasses could represent an opportunity for journalism to reclaim its role as a “pathfinder”. By delivering information that is deeper, more accurate and more visual, news organisations willing to experiment early with AR and AI technologies may gain a decisive advantage in capturing the market over the next three to five years.

Published: February 2026

Design: Ngoc Diep – Thu Trang

Translation: NDO

(This article makes use of AI assistance in the design process)