Experts liken AI to “machines” created by technology engineers that mimic human behaviour and are increasingly approaching true intelligence.

Without careful design and ethical control, AI could lead to serious social consequences.

AI can perpetuate gender or racial discrimination if it is developed using biased datasets. It may also replace a significant number of jobs, posing a major challenge to the labour market. Persons with disabilities, those with limited access to technology, and ethnic minorities must be supported to ensure they are not left behind in the adoption of AI-integrated services.

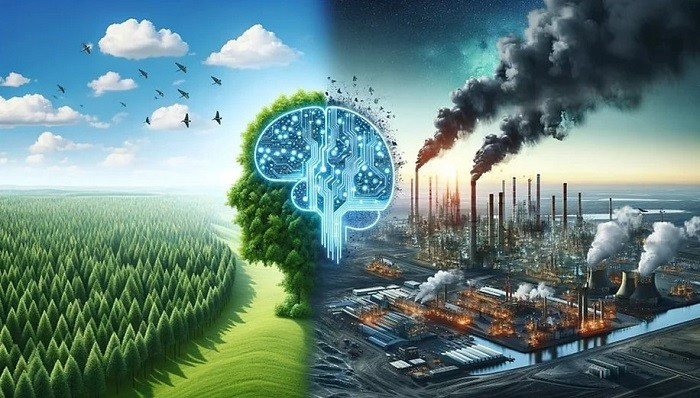

The development of AI also poses specific challenges to the environment and natural resources. Establishing and maintaining data centres, cloud computing infrastructure, and supercomputers requires vast amounts of electricity, water, and physical space for equipment.

In critical areas such as healthcare, accountability becomes a pressing question in the event of errors in diagnosis or treatment. Safeguarding patient information, protecting personal data in general, and ensuring data accuracy are all issues of particular concern.

These risks are not unique to Vietnam; they are global in scope. Therefore, the development and application of AI must be approached with caution, accompanied by appropriate control and regulatory mechanisms.

AI ethics require individuals and organisations involved in AI development to take responsibility for designing, operating, and utilising these “machines” in a transparent, fair, and safe manner for users and society at large. Furthermore, AI ethics underline the collective role of the international community and individual nations in addressing the consequences of AI’s impact on life.

AI development poses certain challenges to the environment and resources. (Illustrative image)

The Party and State of Vietnam have identified that the core purpose of digital transformation is to serve people, in a human-centric manner. Thus, alongside promoting development, emphasis has also been placed on minimising adverse impacts on citizens throughout the digital transformation process.

In its ongoing revision of the Labour Law, the National Assembly has called for the development of policies to support individuals affected by the Fourth Industrial Revolution.

Vietnam enacted the Data Law in 2024 and is currently drafting the Law on Personal Data Protection and amending the Cybersecurity Law to help manage the risks posed by AI.

Agencies and organisations within the political system have been exploring numerous solutions to make smart administrative services more accessible to the public. The operational model of the Hanoi Public Administration Service Centre is one such example.

However, a major challenge lies in striking a balance between innovation and compliance with AI ethics. Excessive regulation may stifle innovation, while lax oversight could result in the very consequences mentioned above.

Moreover, the simultaneous implementation of various scientific and technological development strategies and programmes requires careful planning and resource allocation, especially when resources are limited.

The balance between innovation and AI ethical compliance is a challenge. (Illustrative image)

In this context, proactive engagement from professional associations, businesses, research institutes, and social organisations, working in tandem with the Government to steer responsible AI development, is of great importance.

The National Data Association was officially launched on March 22 with the aim of building a robust data ecosystem, contributing to the growth of the digital economy, and increasing the value of data within the national economy.

Prior to this, the Vietnam Software and IT Services Association (VINASA) established the Artificial Intelligence Ethics Committee. This committee is conducting research to advise regulatory bodies on drafting an AI ethical code of conduct for businesses promoting AI development. At the same time, the committee has proposed the creation of standardised datasets and has called for foreign AI developers operating in Vietnam to adhere to specific criteria.

VINASA’s model should be expanded and encouraged, as AI ethics are not solely the concern of regulatory agencies, but a shared responsibility of the entire society.

In the long term, Vietnam should consider formulating a Responsible AI Development Strategy as part of its broader National Strategy on Artificial Intelligence. This strategy must ensure core values such as fairness, inclusiveness, transparency, safety, and accountability.

More importantly, compliance with the law is a mandatory requirement. Every individual and organisation must possess a deep awareness of their legal and ethical responsibilities in both thought and action when developing and applying AI.

The legal framework must continue to be refined to clearly define legal responsibilities, establish mechanisms for handling violations, and protect the legitimate rights and interests of stakeholders in the digital environment.

International experience also provides valuable reference points for Vietnam in building a suitable AI governance model. A well-developed legal system and effective monitoring mechanisms will help mitigate risks and prevent the misuse of technology without hindering innovation. Only then can AI truly become a tool for serving humanity and advancing social progress.